Computers have been digital for half a century. Why would anyone want to resurrect the clunkers of yesteryear?

WHEN OLD TECH dies, it usually stays dead. No one expects rotary phones or adding machines to come crawling back from oblivion. Floppy diskettes, VHS tapes, cathode-ray tubes: they shall rest in peace. Likewise, we won’t see old analog computers in data centers anytime soon. They were monstrous beasts: difficult to program, expensive to maintain, and limited in accuracy.

Or so I thought. Then I came across this confounding statement:

Bringing back analog computers in much more advanced forms than their historic ancestors will change the world of computing drastically and forever.

Seriously?

I found the prediction in the preface of a handsome illustrated book titled, simply, Analog Computing. Reissued in 2022, it was written by the German mathematician Bernd Ulmann, who seemed very serious indeed.

I’ve been writing about future tech since before WIRED existed and have written six books explaining electronics. I used to develop my own software, and some of my friends design hardware. I’d never heard anyone say anything about analog, so why would Ulmann imagine that this very dead paradigm could be resurrected? And with such far-reaching and permanent consequences?

I felt compelled to investigate further.

AN EXAMPLE of how digital has displaced analog can be seen in photography. In a pre-digital camera, continuous variations in light created chemical reactions on a piece of film, where an image appeared as a representation (an analog) of reality. In a modern camera, by contrast, the light variations are converted to digital values. These are processed by the camera’s CPU before being saved as a stream of 1s and 0s, with digital compression if you wish.

Engineers began using the word analog in the 1940s (shortened from analogue; they like compression) to refer to computers that simulated real-world conditions. But mechanical devices had been doing much the same thing for centuries.

The Antikythera mechanism was an astonishingly complex piece of machinery used more than two thousand years ago in ancient Greece. Containing at least 30 bronze gears, it displayed the everyday movements of the moon, sun, and five planets while also predicting solar and lunar eclipses. Because its mechanical workings simulated real-world celestial events, it is regarded as one of the earliest analog computers.

As the centuries passed, mechanical analog devices were fabricated for earthlier purposes. In the 1800s, an invention called the planimeter consisted of a little wheel, a shaft, and a linkage. You traced a pointer around the edge of a shape on a piece of paper, and the area of the shape was displayed on a scale. The tool became an indispensable item in real-estate offices when buyers wanted to know the acreage of an irregularly shaped piece of land.
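The planimeter works because of Green’s theorem: the area inside a closed curve can be computed from the boundary alone. A digital counterpart of the same idea is the shoelace formula, sketched below for a boundary traced as a list of corner points. This is an illustration of the underlying math, not a model of the planimeter’s wheel and linkage; the function name `traced_area` and the sample plot of land are made up.

```python
# Shoelace formula: area of a closed polygon from its boundary vertices alone.
# A planimeter integrates around a traced boundary mechanically; this is a
# discrete version of the same Green's-theorem idea (hypothetical example).

def traced_area(points):
    """Return the area enclosed by a boundary given as (x, y) vertices in order."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three vertices")
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the loop
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0  # abs() makes the trace direction irrelevant

# An irregular plot of land, corner coordinates in meters (made-up values).
plot = [(0, 0), (120, 0), (150, 80), (60, 110), (-10, 60)]
print(f"{traced_area(plot):.1f} square meters")
```

Tracing the same boundary in the opposite direction flips the sign of the sum, which is why the result is taken as an absolute value.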

Other devices served military needs. If you were on a battleship trying to aim a 16-inch gun at a target beyond the horizon, you needed to assess the orientation of your ship, its motion, its position, and the direction and speed of the wind; clever mechanical components allowed the operator to input these factors and adjust the gun appropriately. Gears, linkages, pulleys, and levers could also predict tides or calculate distances on a map.

In the 1940s, electronic components such as vacuum tubes and resistors were added, because a fluctuating current flowing through them could be analogous to the behavior of fluids, gases, and other phenomena in the physical world. A varying voltage could represent the velocity of a Nazi V2 missile fired at London, for example, or the orientation of a Gemini space capsule in a 1963 flight simulator.

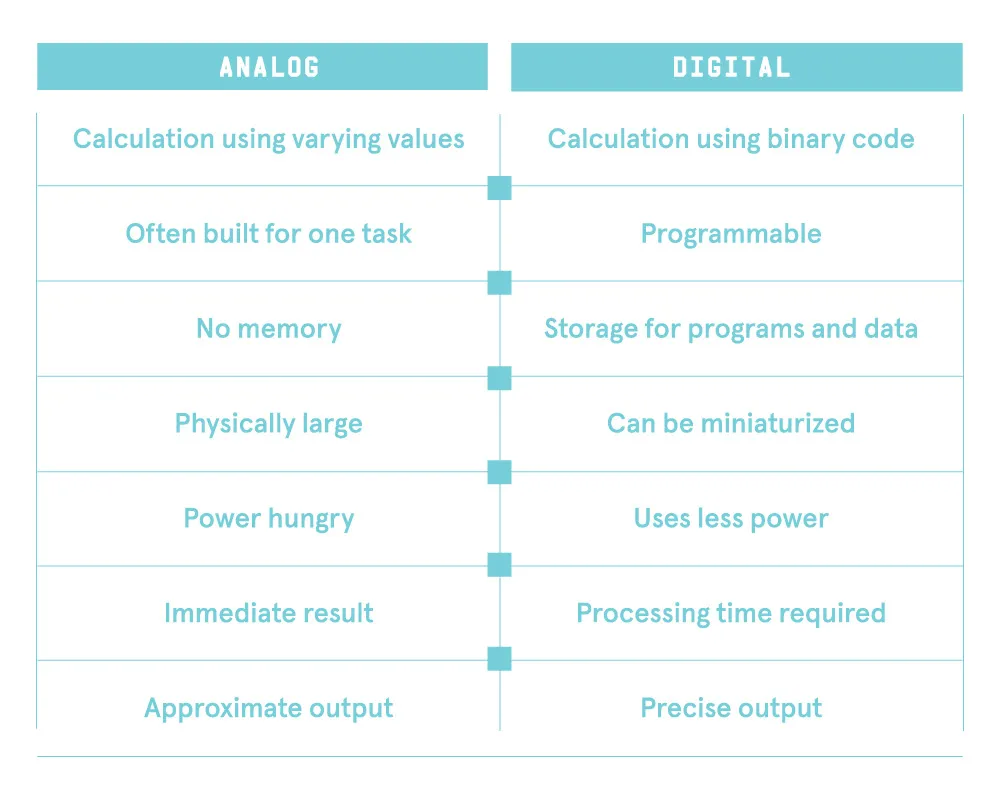

But by then, analog had become a dying art. Instead of using a voltage to represent the velocity of a missile and electrical resistance to represent the air resistance slowing it down, a digital computer could convert variables to binary code: streams of 1s and 0s that were suitable for processing. Early digital computers were massive mainframes full of vacuum tubes, but then integrated circuit chips made digital processing cheaper, more reliable, and more versatile. By the 1970s, the analog-digital difference could be summarized like this:

The last factor was a big deal, as the accuracy of analog computers was always limited by their components. Whether you used gear wheels or vacuum tubes or chemical film, precision was limited by manufacturing tolerances and deteriorated with age. Analog was always modeled on the real world, and the world was never absolutely precise.

WHEN I WAS a nerdy British schoolboy with a mild case of OCD, inaccuracy bothered me a lot. I revered Pythagoras, who told me that a triangle with sides of 3 centimeters and 4 centimeters adjacent to a 90-degree angle would have a diagonal side of 5 centimeters, precisely. Alas, my pleasure diminished when I realized that his proof applied only in a theoretical realm where lines were of zero thickness.

In my everyday realm, precision was limited by my ability to sharpen a pencil, and when I tried to make measurements, I ran into another bothersome feature of reality. Using a magnifying glass, I compared the ruler that I’d bought at a stationery store with a ruler in our school’s physics lab, and discovered that they were not exactly the same length.

How could this be? Seeking enlightenment, I checked the history of the metric system. The meter was the fundamental unit, but it had been birthed from a bizarre combination of nationalism and whimsy. After the French Revolution, the new government instituted the meter to get away from the imprecision of the ancien régime. The French Academy of Sciences defined it as the longitudinal distance from the equator, through Paris, to the North Pole, divided by 10 million. In 1799, the meter was solemnized like a religious totem in the form of a platinum bar at the French National Archives. Copies were made and distributed across Europe and to the Americas, and then copies were made of the copies’ copies. This process introduced transcription errors, which eventually led to my troubling discovery that rulers from different sources might be visibly unequal.

Similar problems impeded any definitive measurement of time, temperature, and mass. The conclusion was inescapable to my adolescent mind: If you were hoping for absolute precision in the physical realm, you couldn’t have it.

My personal term for the inexact nature of the messy, fuzzy world was muzzy. But then, in 1980, I acquired an Ohio Scientific desktop computer and found prompt, lasting relief. All its operations were built on a foundation of binary arithmetic, in which a 1 was always exactly a 1, and a 0 was a genuine 0, with no fractional quibbling. The 1 of existence, and the 0 of nothingness! I fell in love with the purity of digital and learned to write code, which became a lifelong refuge from muzzy math.

Of course, digital values still had to be stored in fallible physical components, but margins of error took care of that. In a typical 5-volt digital chip, 1.5 volts or lower would represent the number 0 while 3.5 volts or higher would represent the number 1. Components on a decently engineered motherboard would stay within those limits, so there shouldn’t have been any misunderstandings.
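Those margins of error can be written down as a simple decision rule. The sketch below uses the 1.5-volt and 3.5-volt thresholds from the paragraph above; treating anything in between as “undefined” is an assumption of this illustration, and `read_bit` is a hypothetical name.

```python
# Read a voltage on a 5 V digital line as a bit, using the thresholds above:
# 1.5 V or lower means 0, 3.5 V or higher means 1. Voltages in between are
# treated as undefined (a decently engineered board should never produce them).

V_LOW_MAX = 1.5   # volts: highest voltage still read as 0
V_HIGH_MIN = 3.5  # volts: lowest voltage read as 1

def read_bit(voltage):
    """Return 0, 1, or None (undefined) for a measured voltage."""
    if voltage <= V_LOW_MAX:
        return 0
    if voltage >= V_HIGH_MIN:
        return 1
    return None

# A nominal 5.0 V "1" that has sagged by 0.8 V and a nominal 0 V "0" that has
# picked up 0.9 V of noise are both still read correctly.
print(read_bit(5.0 - 0.8), read_bit(0.0 + 0.9), read_bit(2.5))
```

The point is that a sizable error in either direction changes nothing: the bit is recovered exactly, which is the “no fractional quibbling” property of digital.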

Consequently, when Bernd Ulmann predicted that analog computers were due for a zombie comeback, I wasn’t just skeptical. I found the idea a bit … disturbing.

HOPING FOR A reality check, I consulted Lyle Bickley, a founding member of the Computer History Museum in Mountain View, California. Having served for years as an expert witness in patent suits, Bickley maintains an encyclopedic knowledge of everything that has been done and is still being done in data processing.

“A lot of Silicon Valley companies have secret projects doing analog chips,” he told me.

Really? But why?

“Because they take so little power.”

Bickley explained that when, say, brute-force natural-language AI systems distill millions of words from the internet, the process is insanely power hungry. The human brain runs on a small amount of electricity, he said, about 20 watts. (That’s the same as a light bulb.) “Yet if we try to do the same thing with digital computers, it takes megawatts.” For that kind of application, digital is “not going to work. It’s not a smart way to do it.”

Bickley said he would be violating confidentiality to tell me specifics, so I went looking for startups. Quickly I found a San Francisco Bay Area company called Mythic, which claimed to be marketing the “industry-first AI analog matrix processor.”

Mike Henry cofounded Mythic at the University of Michigan in 2013. He’s an energetic man with a neat haircut and a well-ironed shirt, like an old-time IBM salesman. He expanded on Bickley’s point, citing the brain-like neural network that powers GPT-3. “It has 175 billion synapses,” Henry said, comparing processing elements with connections between neurons in the brain. “So every time you run that model to do one thing, you have to load 175 billion values. Very large data-center systems can barely keep up.”

That’s because, Henry said, they are digital. Modern AI systems use a type of memory called static RAM, or SRAM, which requires constant power to store data. Its circuitry must remain switched on even when it’s not performing a task. Engineers have done a lot to improve the efficiency of SRAM, but there’s a limit. “Tricks like lowering the supply voltage are running out,” Henry said.

Mythic’s analog chip uses much less power by storing neural weights not in SRAM but in flash memory, which doesn’t consume power to retain its state. And the flash memory is embedded in a processing chip, a configuration Mythic calls “compute-in-memory.” Instead of consuming a lot of power moving millions of bytes back and forth between memory and a CPU (as a digital computer does), some processing is done locally.
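To see how processing can happen “locally” inside memory, here is a generic sketch of the principle usually described for analog in-memory computing, not Mythic’s actual circuit. Weights are stored as conductances in a grid (a crossbar), input values arrive as voltages, each cell passes a current equal to conductance times voltage (Ohm’s law), and the currents meeting on a shared wire add up (Kirchhoff’s current law). The physics itself performs a matrix-vector multiplication. The function name, weight values, and noise model are all hypothetical.

```python
import random

def crossbar_mvm(conductance_matrix, voltages, noise=0.0, rng=None):
    """Idealized resistive crossbar: return the current on each output line.

    conductance_matrix[i][j] is the weight stored (as a conductance, in siemens)
    where input line j crosses output line i. Each cell passes a current G * V
    (Ohm's law), and currents meeting on an output line add up (Kirchhoff's
    current law), so output i carries sum_j G[i][j] * V[j].
    `noise` is a hypothetical relative error applied to each stored conductance.
    """
    if rng is None:
        rng = random.Random(0)
    currents = []
    for row in conductance_matrix:
        total = 0.0
        for g, v in zip(row, voltages):
            if noise:
                g = g * (1.0 + rng.gauss(0.0, noise))  # imperfectly stored weight
            total += g * v
        currents.append(total)
    return currents

# Made-up weights (siemens) and input activations (volts).
weights = [[1.0e-6, 2.0e-6, 0.5e-6],
           [3.0e-6, 0.0,    1.0e-6]]
inputs = [0.2, 0.1, 0.4]

ideal = crossbar_mvm(weights, inputs)
drifted = crossbar_mvm(weights, inputs, noise=0.02, rng=random.Random(1))
print(["%.3f uA" % (i * 1e6) for i in ideal])
print(["%.3f uA" % (i * 1e6) for i in drifted])
```

The ideal currents come out to 0.6 and 1.0 microamps; the noisy run shows that the answer is only as good as the stored conductances, which is exactly the accuracy worry raised next.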

What bothered me was that Mythic seemed to be reintroducing the accuracy problems of analog. The flash memory was not storing a 1 or 0 with comfortable margins of error, like old-school logic chips. It was holding intermediate voltages (as many as 256 of them!) to simulate the varying states of neurons in the brain, and I had to wonder whether those voltages would drift over time. Henry didn’t seem to think they would.
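Why 256 levels make drift a worry can be put in numbers. If a cell’s usable voltage range is split into evenly spaced levels and each reading is snapped to the nearest level, a stored value survives any drift smaller than half the gap between levels. The sketch below assumes a usable range of 1.0 volt purely for illustration; that figure is not a published Mythic specification, and the function names are hypothetical.

```python
# Drift tolerance of a memory cell that stores one of n evenly spaced levels.
# A reading is snapped to the nearest level, so the stored value survives any
# drift smaller than half the spacing between adjacent levels.
# FULL_SCALE = 1.0 V is an assumed range, not a real device specification.

FULL_SCALE = 1.0  # volts (hypothetical usable range of one cell)

def level_spacing(levels, full_scale=FULL_SCALE):
    return full_scale / (levels - 1)

def drift_margin(levels, full_scale=FULL_SCALE):
    """Largest drift (volts) that still reads back as the original level."""
    return level_spacing(levels, full_scale) / 2

def read_level(voltage, levels, full_scale=FULL_SCALE):
    """Snap a measured voltage to the nearest of `levels` evenly spaced levels."""
    level = round(voltage / level_spacing(levels, full_scale))
    return max(0, min(levels - 1, level))  # clamp to the valid range

for n in (2, 256):
    print(f"{n:>3} levels: drift margin {drift_margin(n) * 1000:.2f} mV")

# The same 3 mV of drift is harmless for a binary cell but corrupts a 256-level one.
stored = 100 * level_spacing(256)          # level 100 of 256
print(read_level(stored + 0.003, 256))     # no longer reads as 100
print(read_level(FULL_SCALE - 0.003, 2))   # still reads as 1
```

Under these assumptions a two-level cell tolerates about half a volt of drift, while a 256-level cell tolerates under 2 millivolts.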

I had another problem with his chip: The way it worked was hard to explain. Henry laughed. “Welcome to my life,” he said. “Try explaining it to venture capitalists.” Mythic’s success on that front has been variable: Shortly after I spoke to Henry, the company ran out of cash. (More recently it raised $13 million in new funding and appointed a new CEO.)

I next went to IBM. Its corporate PR department connected me with Vijay Narayanan, a researcher in the company’s physics-of-AI group. He preferred to interact via company-sanctioned email statements.

For the moment, Narayanan wrote, “our analog research is about customizing AI hardware, particularly for energy efficiency.” So, the same goal as Mythic. However, Narayanan seemed rather circumspect on the details, so I did some more reading and found an IBM paper that referred to “no considerable accuracy loss” in its memory systems. No considerable loss? Did that mean there was some loss? Then there was the durability issue. Another paper mentioned “an accuracy above 93.5 percent retained over a one-day period.” So it had lost 6.5 percent in just one day? Was that bad? What should it be compared to?

So many unanswered questions, but the biggest letdown was this: Both Mythic and IBM seemed interested in analog computing only insofar as specific analog processes could reduce the energy and storage requirements of AI, not perform the fundamental bit-based calculations. (The digital components would still do that.) As far as I could tell, this wasn’t anything close to the second coming of analog as predicted by Ulmann. The computers of yesteryear may have been room-sized behemoths, but they could simulate everything from the liquid flowing through a pipe to nuclear reactions. Their applications shared one attribute. They were dynamic. They involved the concept of change.

ANOTHER CHILDHOOD CONUNDRUM: If I held a ball and dropped it, the force of gravity made it move at an increasing speed. How could you figure out the total distance the ball traveled if the speed was changing continuously over time? You could break its journey down into seconds or milliseconds or microseconds, work out the speed at each step, and add up the distances. But if time actually flowed in tiny steps, the speed would have to jump instantly between one step and the next. How could that be true?

Later I learned that these questions had been addressed by Isaac Newton and Gottfried Leibniz centuries ago. They’d said that velocity does change in increments, but the increments are infinitely small.

So there were steps, but they weren’t really steps? It sounded like an evasion to me, but on this iffy premise, Newton and Leibniz developed calculus, enabling everyone to calculate the behavior of countless naturally changing aspects of the world. Calculus is a way of mathematically modeling something that’s continuously changing, like the distance traversed by a falling ball, as a sequence of infinitely small differences: a differential equation.
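The schoolboy method of chopping the fall into small steps, holding the speed fixed within each step, and adding up the distances can be tried directly. The sketch below ignores air resistance, uses g = 9.81 m/s² and a 3-second fall, and compares the stepwise total against the calculus answer, d = g t² / 2. The function name is hypothetical.

```python
GRAVITY = 9.81  # m/s^2, near Earth's surface; air resistance ignored

def distance_by_steps(total_time, dt):
    """Add up speed * dt over small steps, holding the speed fixed within each step."""
    steps = round(total_time / dt)
    distance = 0.0
    for k in range(steps):
        speed = GRAVITY * (k * dt)   # speed at the start of step k
        distance += speed * dt       # distance covered during that step
    return distance

T = 3.0                          # seconds of falling
exact = GRAVITY * T**2 / 2       # the calculus answer, d = g t^2 / 2
for dt in (1.0, 0.1, 0.001):
    approx = distance_by_steps(T, dt)
    print(f"dt = {dt:<5} s: {approx:8.4f} m (calculus says {exact:.4f} m)")
```

With this rule the stepwise total always falls short by exactly g·T·dt/2, so every tenfold cut in the step size cuts the error tenfold. Only infinitely small steps, the premise of Newton and Leibniz, close the gap completely.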

That math could be used as the input to old-school analog electronic computers, often called, for this reason, differential analyzers. You could plug components together to represent operations in an equation, set some values using potentiometers, and the answer could be shown almost immediately as a trace on an oscilloscope screen. It might not have been ideally accurate, but in the muzzy world, as I had learned to my discontent, nothing was ideally accurate.
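A classic exercise on such machines was to solve y″ = −ω²y (a mass bouncing on a spring) by patching two integrators into a loop with a gain block: the first integrator turns acceleration into velocity, the second turns velocity into position, and the gain block feeds the position back as the next acceleration. The sketch below imitates that patch on a digital computer with small time steps. It assumes idealized, non-inverting integrators (real analog integrators typically invert the sign), and the function name is hypothetical. A real analog machine integrates continuously, so the step size here is purely an artifact of the simulation.

```python
import math

def run_patch(omega, y0, v0, t_end, dt=1e-4):
    """Simulate the patch: gain(-omega^2) -> integrator A -> integrator B -> back to gain.

    Integrator A turns acceleration into velocity; integrator B turns velocity
    into position; the gain block closes the loop by computing y'' = -omega^2 * y.
    Returns the (time, y) pairs an oscilloscope would trace.
    """
    y, v = y0, v0                 # initial conditions set on the two integrators
    trace = []
    for k in range(round(t_end / dt) + 1):
        trace.append((k * dt, y))
        accel = -omega**2 * y     # gain block output
        v += accel * dt           # integrator A
        y += v * dt               # integrator B (uses the freshly updated v)
    return trace

# One full period of y'' = -y, starting at rest from y = 1; the exact answer is cos(t).
trace = run_patch(omega=1.0, y0=1.0, v0=0.0, t_end=2 * math.pi)
worst = max(abs(y - math.cos(t)) for t, y in trace)
print(f"largest deviation from cos(t): {worst:.1e}")
```

Updating the velocity before the position (semi-implicit Euler) is a deliberate choice: with plain forward Euler, the simulated oscillation slowly grows instead of holding steady.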

To be competitive, a true analog computer that could emulate such versatile behavior would have to be suitable for low-cost mass production, on the scale of a silicon chip. Had such a thing been developed? I went back to Ulmann’s book and found the answer on the penultimate page. A researcher named Glenn Cowan had created a genuine VLSI (very large-scale integrated circuit) analog chip back in 2003. Ulmann complained that it was “limited in capabilities,” but it sounded like the real deal.

GLENN COWAN IS a studious, methodical, amiable man and a professor in electrical engineering at Montreal’s Concordia University. As a grad student at Columbia back in 1999, he had a choice between two research topics: One would entail optimizing a single transistor, while the other would be to develop an entirely new analog computer. The latter was the pet project of an adviser named Yannis Tsividis. “Yannis kind of convinced me,” Cowan told me, sounding as if he wasn’t quite sure how it happened.

Initially, there were no specifications, because no one had ever built an analog computer on a chip. Cowan didn’t know how accurate it could be and was basically making it up as he went along. He had to take other courses at Columbia to fill the gaps in his knowledge. Two years later, he had a test chip that, he told me modestly, was “full of graduate-student naivete. It looked like a breadboarding nightmare.” Still, it worked, so he decided to stick around and make a better version. That took another two years.

A key innovation of Cowan’s was making the chip reconfigurable, or programmable. Old-school analog computers had used clunky patch cords on plug boards. Cowan did the same thing in miniature, between areas on the chip itself, using a preexisting technology known as transmission gates. These can work as solid-state switches to connect the output from processing block A to the input of block B, block C, or any other block you choose.

His second innovation was to make his analog chip compatible with an off-the-shelf digital computer, which could help to work around limits on precision. “You could get an approximate analog solution as a starting point,” Cowan explained, “and feed that into the digital computer as a guess, because iterative routines converge faster from a good guess.” The end result of his great labor was etched onto a silicon wafer measuring a very respectable 10 millimeters by 10 millimeters. “Remarkably,” he told me, “it did work.”
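Cowan’s point about good guesses is easy to demonstrate with any iterative routine. The sketch below uses Newton’s method for a square root as a stand-in; it is not the routine Cowan’s system actually ran. The “analog” starting point is simulated by taking the true answer and making it 1 percent wrong, which is an assumption chosen for illustration.

```python
def newton_sqrt(a, guess, rel_tol=1e-12, max_iter=200):
    """Newton's iteration x -> (x + a/x) / 2 for sqrt(a); returns (root, iterations)."""
    x = guess
    for i in range(1, max_iter + 1):
        nxt = 0.5 * (x + a / x)
        if abs(nxt - x) <= rel_tol * abs(nxt):   # successive guesses agree: stop
            return nxt, i
        x = nxt
    raise RuntimeError("no convergence")

a = 2.0e6
root_cold, iters_cold = newton_sqrt(a, guess=a)                # crude digital starting point
root_warm, iters_warm = newton_sqrt(a, guess=1.01 * a ** 0.5)  # stand-in for a 1%-accurate analog answer
print(f"cold start: {iters_cold} iterations, warm start: {iters_warm} iterations")
```

From a close guess, Newton’s method roughly squares the relative error on every pass (1 percent, then about 0.005 percent, then about one part in a billion), so a handful of iterations suffice. The cold start spends most of its effort just halving its way toward the right neighborhood.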

When I asked Cowan about real-world applications, inevitably he mentioned AI. But I’d had some time to think about neural nets and was beginning to feel skeptical. In a standard neural net setup, known as a crossbar configuration, each cell in the net connects with four other cells. They may be layered to allow for more connections, but even so, they’re far less complex than the frontal cortex of the brain, in which each individual neuron can be connected with 10,000 others. Moreover, the brain is not a static network. During the first year of life, new neural connections form at a rate of 1 million per second. I saw no way for a neural network to emulate processes like that.

GLENN COWAN’S SECOND analog chip wasn’t the end of the story at Columbia. Additional refinements were necessary, but Yannis Tsividis had to wait for another graduate student who would continue the work.

In 2011 a soft-spoken young man named Ning Guo turned out to be willing. Like Cowan, he had never designed a chip before. “I found it, um, exceptionally challenging,” he told me. He laughed at the memory and shook his head. “We were too optimistic,” he recalled ruefully. He laughed again. “Like, we thought we could get it done by the summer.”

In fact, it took more than a year to complete the chip design. Guo said Tsividis had required a “90 percent confidence level” that the chip would work before he would proceed with the expensive process of fabrication. Guo took a chance, and the result he named the HCDC, which means hybrid continuous discrete computer. Guo’s prototype was then incorporated on a board that could interface with an off-the-shelf digital computer. From the outside, it looked like an accessory circuit board for a PC.

When I asked Guo about possible applications, he had to think for a bit. Instead of mentioning AI, he suggested tasks such as simulating a lot of moving mechanical joints that would be rigidly connected to each other in robotics. Then, unlike many engineers, he allowed himself to speculate.

There are diminishing returns on the digital model, he said, yet it still dominates the industry. “If we applied as many people and as much money to the analog domain, I think we could have some kind of analog coprocessing happening to accelerate the existing algorithms. Digital computers are very good for scalability. Analog is very good for complex interactions between variables. In the future, we may combine these advantages.”

THE HCDC WAS fully functional, but it had a problem: It was not easy to use. Fortuitously, a talented programmer at MIT named Sara Achour read about the project and saw it as an ideal target for her skills. She was a specialist in compilers (programs that convert a high-level programming language into machine language) and could add a more user-friendly front end in Python to help people program the chip. She reached out to Tsividis, and he sent her one of the few precious boards that had been fabricated.

When I spoke with Achour, she was entertaining and engaging, delivering terminology at a manic pace. She told me she had originally intended to be a doctor but switched to computer science after having pursued programming as a hobby since middle school. “I had specialized in math modeling of biological systems,” she said. “We did macroscopic modeling of gene protein hormonal dynamics.” Seeing my blank look, she added: “We were trying to predict things like hormonal changes when you inject someone with a particular drug.”

Changes was the key word. She was fully acquainted with the math to describe change, and after two years she finished her compiler for the analog chip. “I didn’t build, like, an entry-level product,” she said. “But I made it easier to find resilient implementations of the computation you want to run. You see, even the people who design this type of hardware have difficulty programming it. It’s still extremely painful.”

I liked the idea of a former medical student alleviating the pain of chip designers who had difficulty using their own hardware. But what was her take on applications? Are there any?

“Yes, whenever you’re sensing the environment,” she said. “And reconfigurability lets you reuse the same piece of hardware for multiple computations. So I don’t think this is going to be relegated to a niche model. Analog computation makes a lot of sense when you’re interfacing with something that is inherently analog.” Like the real world, with all its muzziness.

GOING BACK TO the concept of dropping a ball, and my interest in finding out how far it travels during a period of time: Calculus solves that problem easily, with a differential equation, if you ignore air resistance. The proper term for this is “integrating velocity with respect to time.”

But what if you don’t ignore air resistance? The faster the ball falls, the more air resistance it encounters. But gravity remains constant, so the ball’s speed doesn’t increase at a steady rate but tails off until it reaches terminal velocity. You can express this in a differential equation too, but it adds another layer of complexity. I won’t get into the mathematical notation (I prefer to avoid the pain of it, to use Sara Achour’s memorable term), because the take-home message is all that matters. Every time you introduce another factor, the scenario gets more complicated. If there’s a crosswind, or the ball collides with other balls, or it falls down a hole to the center of the Earth, where gravity is zero, the situation can get discouragingly complicated.
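For readers who don’t mind the notation, a common model of air resistance makes drag proportional to the square of the speed, so dv/dt = g − (k/m)v². Drag grows until it balances gravity, at the terminal velocity √(mg/k). The sketch below steps that equation forward digitally, one small time slice at a time. The ball’s mass and the drag coefficient k (which lumps together air density, cross-sectional area, and shape) are made-up values, and the function name is hypothetical.

```python
import math

GRAVITY = 9.81   # m/s^2
MASS = 0.6       # kg (hypothetical ball)
K_DRAG = 0.01    # kg/m, lumps air density, area, and shape together (hypothetical)

def fall_with_drag(t_end, dt=1e-3):
    """Euler-step dv/dt = g - (k/m) * v**2 from rest; return (speed, distance fallen)."""
    v, d = 0.0, 0.0
    for _ in range(round(t_end / dt)):
        a = GRAVITY - (K_DRAG / MASS) * v * v   # gravity minus drag per unit mass
        d += v * dt
        v += a * dt
    return v, d

v_terminal = math.sqrt(MASS * GRAVITY / K_DRAG)  # where drag exactly balances gravity
for t in (1, 5, 20):
    v, d = fall_with_drag(t)
    print(f"t={t:>2} s  speed={v:6.2f} m/s  ({v / v_terminal:.0%} of terminal)  fallen={d:7.1f} m")
```

Even this single ball takes 20,000 steps to simulate 20 seconds at a millisecond resolution, and every added object or force multiplies that work, which is the scaling problem described next.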

Now suppose you want to simulate the scenario using a digital computer. It’ll need a lot of data points to generate a smooth curve, and it’ll have to continually recalculate all the values for each point. Those calculations will add up, especially if multiple objects become involved. If you have billions of objects (as in a nuclear chain reaction, or synapse states in an AI engine), you’ll need a digital processor containing maybe 100 billion transistors to crunch the data at billions of cycles per second. And in each cycle, the switching operation of each transistor will generate heat. Waste heat becomes a serious issue.

Using a new-age analog chip, you just express all the factors in a differential equation and type it into Achour’s compiler, which converts the equation into machine language that the chip understands. The brute force of binary code is minimized, and so are the power consumption and the heat. The HCDC is like an efficient little helper residing secretly amid the modern hardware, and it’s chip-sized, unlike the room-sized behemoths of yesteryear.

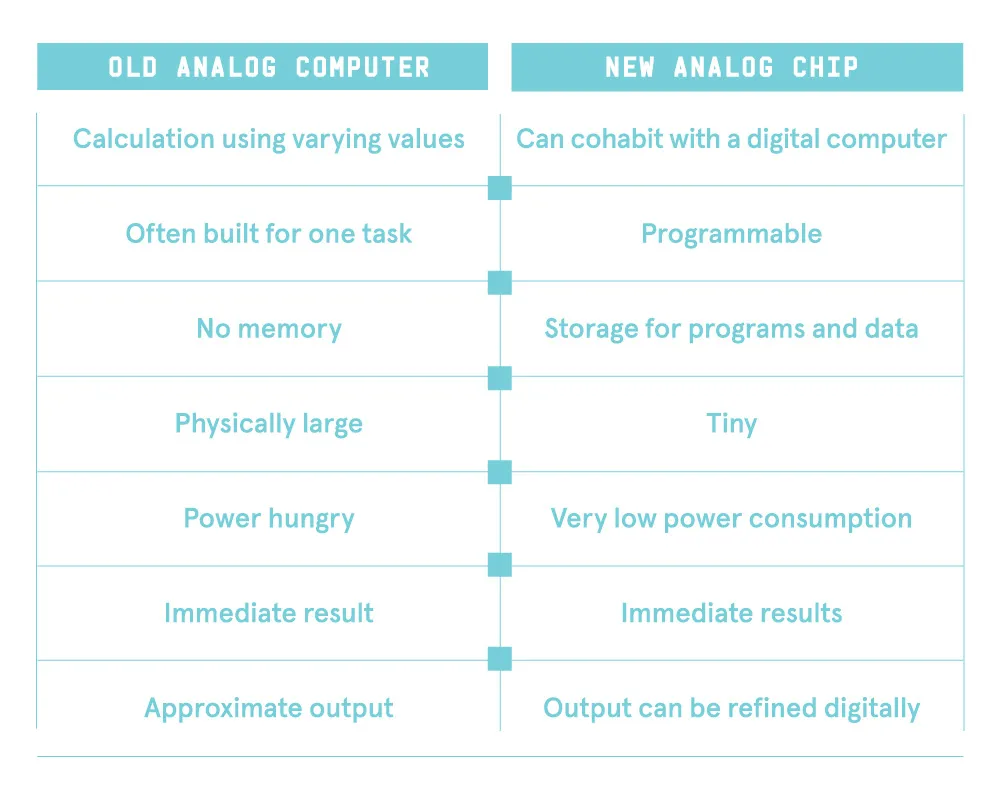

Now I have to update the basic analog attributes:

You can see how the designs by Tsividis and his grad students have addressed the historic disadvantages in my previous list. And yet, despite all this, Tsividis, the prophet of modern analog computing, still has difficulty getting people to take him seriously.

BORN IN GREECE in 1946, Tsividis developed an early dislike for geography, history, and chemistry. “I felt as if there were more facts to memorize than I had synapses in my brain,” he told me. He loved math and physics but ran into a particular problem when a teacher assured him that the perimeter of any circle was three times the diameter plus 14 centimeters. Of course, it should be (approximately) 3.14 times the diameter of the circle, but when Tsividis said so, the teacher told him to be quiet. This, he has said, “suggested rather strongly that authority figures are not always right.”

He taught himself English, started learning electronics, designed and built devices like radio transmitters, and eventually fled from the Greek college system that had forced him to study organic chemistry. In 1972 he began graduate studies in the United States, and over the years became known for challenging orthodoxy in the field of computer science. One circuit designer referred to him as “the analog MOS freak,” after he designed and fabricated an amplifier chip in 1975 using metal-oxide-semiconductor technology, which virtually no one believed was suitable for the task.

These days, Tsividis is polite and down to earth, with no interest in wasting words. His attempt to bring back analog in the form of integrated chips began in earnest in the late ’90s. When I talked to him, he told me he had 18 boards with analog chips mounted on them, a couple more having been loaned out to researchers such as Achour. “But the project is on hold now,” he said, “because the funding ended from the National Science Foundation. And then we had two years of Covid.”

I asked what he would do if he got new funding.

“I would want to know: If you put together many chips to model a large system, then what happens? So we will try to put together many of these chips and eventually, with the help of silicon foundries, make a large computer on a single chip.”

I pointed out that development so far has already taken almost 20 years.

“Yes, but there were several years of breaks in between. Whenever there is adequate funding, I revive the process.”

I asked him whether the state of analog computing today could be compared to that of quantum computing 25 years ago. Could it follow a similar path of development, from fringe consideration to common (and well-funded) acceptance?

It would take a fraction of the time, he said. “We have our experimental results. It has proven itself. If there is a group that wants to make it user-friendly, within a year we could have it.” And at this point, he is willing to provide analog computer boards to interested researchers, who can use them with Achour’s compiler.

What sort of people would qualify?

“The background you need is not just computers. You really need the math background to know what differential equations are.”

I asked him whether he felt that his idea was, in a way, obvious. Why hadn’t it resonated with more people?

“People do wonder why we are doing this when everything is digital. They say digital is the future, digital is the future, and of course, it’s the future. But the physical world is analog, and in between you have a big interface. That’s where this fits.”

WHEN TSIVIDIS MENTIONED offhandedly that people applying analog computation would need a solid math background, I started to wonder. Developing algorithms for digital computers can be a strenuous mental exercise, but calculus is seldom required. When I mentioned this to Achour, she laughed and said that when she submits papers to reviewers, “Some of them say they haven’t seen differential equations in years. Some of them have never seen differential equations.”

And no doubt a lot of them won’t want to. But financial incentives have a way of overcoming resistance to change. Imagine a future where software engineers can command an extra $100K per annum by adding a new bullet point to a résumé: “Fluent in differential equations.” If that happens, I’m thinking that Python developers will soon be signing up for remedial online calculus classes.

Likewise, in business, the deciding factor will be financial. There’s going to be a lot of money in AI, as well as in smarter drug molecules, agile robots, and a dozen other applications that model the muzzy complexity of the physical world. If power consumption and heat dissipation become really expensive problems, and shunting some of the digital load into miniaturized analog coprocessors is significantly cheaper, then no one will care that analog computation used to be done by your math-genius grandfather using a big steel box full of vacuum tubes.

Reality really is imprecise, no matter how much I would prefer otherwise, and when you want to model it with truly exquisite fidelity, digitizing it may not be the most sensible method. Therefore, I must conclude:

Analog is dead.

Long live analog.